Egomotion Estimation Using Binocular Spatiotemporal Oriented Energy

Abstract

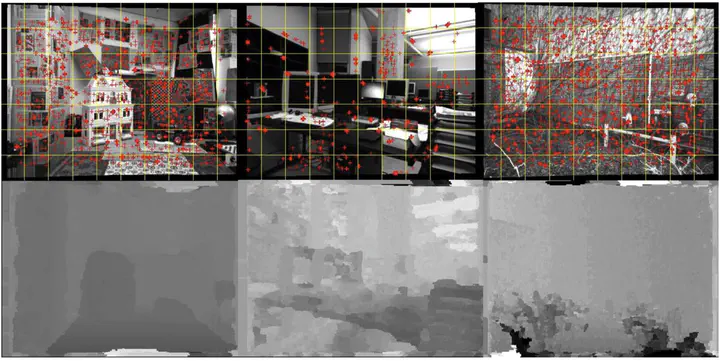

Camera egomotion estimation is concerned with recovering a camera’s motion (e.g., instantaneous translation and rotation) as it moves through its environment, a problem of both theoretical and practical interest. This paper presents a novel algorithm for egomotion estimation based on binocularly matched spatiotemporal oriented energy distributions. Because the estimate is derived from oriented energy measurements, egomotion can be recovered without establishing temporal correspondences or converting disparity into 3D world coordinates. The algorithm has been implemented in software and evaluated quantitatively on a novel laboratory dataset with ground truth, as well as qualitatively on indoor and outdoor real-world datasets. Compared with comparable alternative algorithms, it exhibits the best overall performance.